How do Large Language Models Represent Information?

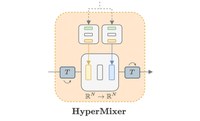

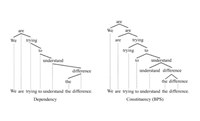

Pretrained LLMs have demonstrated impressive abilities, but it is hard to understand how they work or how well they will generalise to a new domain. Idiap researchers are developing a model of how information is represented inside LLMs. By identifying and removing unreliable information, this model can improve generalisation to new domains without the need for any additional training.

Natural Language Understanding
